The anxiety of obsolescence is strong in contemporary letters, not so much because literature is actually obsolete as because any downward graph, projected out forever, goes to zero. From its corner of the culture the novel has enviously watched its competitors for public attention go through rapid advancement and innovation. The faster they go the more novels look static in comparison. Many people today playing Candy Crush on the subway or at thirty thousand feet would, just a decade ago, have been reading. Television has evolved into an amorphous creature with tendrils in all corners of our multitasking and screen-illumined daily lives. One cannot do the dishes while reading a novel, but an iPad props up just fine.

The anxiety over literature felt by writers, publishers, and readers is exacerbated by the fact that rival media are increasingly co-opting literary techniques once thought to belong solely to the novel. A slow edging out can be discerned, then projected all the way to replacement. Television is at the forefront of this movement. It’s been so successful that a 2014 New York Times essay asked, “Are the New ‘Golden Age’ TV Shows the New Novels?” I’ve heard the refrain personally, often from people who a generation ago would have been dedicated readers. Just recently a friend of mine, leaning against my bookshelf, asked, “Why should I read novels? I can satisfy any yearnings I have for fiction with TV. It’s just so damn good now.”

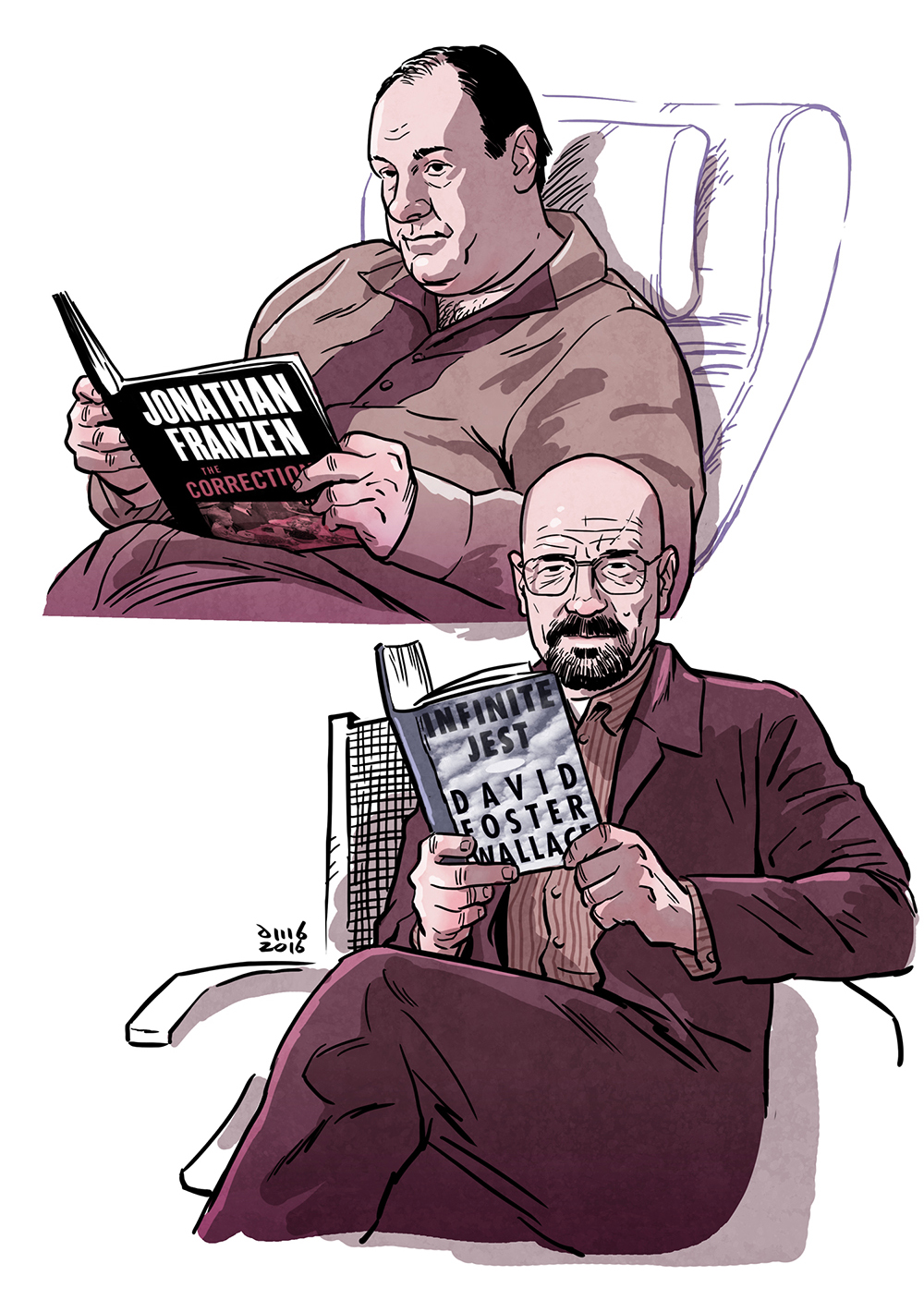

It’s not like there is an open war on. Writers watch a lot of television, and Jonathan Franzen recently admitted “I had to redefine my definition of a novel to include serial cable television” — a move that makes sense in a publishing industry where selling the screen rights to a novel is considered the diadem of success. The ascendency of Elena Ferrante was recently sealed by news of a planned HBO series based on her Neapolitan novels. The Wire, The Sopranos, Mad Men, and other New Golden Age shows have demonstrated serious dramatic chops. Writers, often fans of such shows, are also acutely aware of their dominant role in the cultural conversation, which makes it inevitable that they will develop the urge to respond to them, either by mimicry or rejection.

I wrote about this kind of “HBO anxiety” in a recent review of Garth Risk Hallberg’s novel City on Fire. Hallberg tries to appropriate many of the hallmarks of these shows: great length, diverse interconnecting story arcs, episodic structure, televisual writing built around visual “shots,” and so on — meaning that the biggest fiction debut of 2015, written by an accomplished literary critic, was in close dialogue with contemporary television.

Hallberg himself has been open about this, admitting that The Wire was a strong influence. He’s by no means alone. For example, here’s novelist Mark Z. Danielewski talking about his recently begun, ongoing, multi-volume story The Familiar, a planned series of twenty-seven books:

The Familiar is my remediation of a television series. You aren’t guaranteed several seasons of a particular show when you pitch it to the network. Pantheon gave me the green light…. The burden here is on me. That’s how television works. Let’s say so far, I’ve cleared the pilot plus a season…. Which as far as television goes is pretty good.

Or consider the dual lives of George R.R. Martin’s Game of Thrones, which has become a living metaphor for the competition between novels and television. While at first A Song of Ice and Fire, as the book series is known, was considered the uncontested canon, matters have changed now that the HBO adaptation has advanced beyond the published books. Martin has told the showrunners the outline of his ending, and they will be the ones filling it in, years before him. So then what’s canon in the Game of Thrones universe? There’s a strong case that it’s the show, with its primacy in viewership and completion and its undeniable quality, and that the novels now serve as a kind of extended edition for fans. Martin, in fact, was originally a screenwriter; it was the budgetary constraints of movie studios, their inability to put his imagination to screen, that led him to write the Song of Ice and Fire series. (Note that the more common name is the title of the TV show.) But now, with a budget of $100 million for the latest season alone, it’s the showrunners, David Benioff and D.B. Weiss, who are really writing Game of Thrones.

This is one of the signature changes in the New Golden Age: showrunners are now often writers, sometimes novelists who switched over to television. For instance, HBO’s The Leftovers, co-written by Tom Perrotta, is based on Perrotta’s novel of the same name. Showrunner can be a powerful position. It’s apparent from his interview in The Paris Review that Matt Weiner had complete artistic control over his show Mad Men. This prospect of near-total control, on top of television’s opportunity to reach audiences in the millions, is probably why novelist Zadie Smith said in a 2013 interview that every writer she knew in New York was writing a TV show. Smith herself reported that she was writing a science fiction screenplay in collaboration with her poet husband.

Back in 1996, pre-Golden Age, Jonathan Franzen mentioned his attempt at screenwriting in his Harper’s essay “Why Bother?” (as in: why bother writing novels?). After Franzen had gone through several drafts of a script, his Hollywood agent finally brought him to realize that control would be stripped from him: any product would be arrived at by committee, a thing re-written five times and then audience-tested, a dive toward the lowest common denominator. So he abandoned it for the sake of artistic integrity. But then, in 2012, well into the New Golden Age, he put great effort into adapting his novel The Corrections into an HBO pilot. The network declined to pick it up as a series, but plans for an adaptation of his new novel, Purity, are now in the works.

Over the last two decades, the encroachment into traditional literary terrain has come not just from television but also from the breakneck advancement of video games. Novels, particularly fantasy and sci-fi, used to be the only place for intricate world-building. Think of Larry Niven’s Ringworld (1970), winner of the Hugo and Nebula Awards, which takes place on the engineered habitat of a giant artificial ring in space — such that, looking up, you would be looking down at other parts of the ring, miles distant. Halo, the hugely popular video game franchise launched in 2001, takes place on exactly such an artificial ring world. But this time, you could actually experience the dizziness, disorientation, and awe of gazing skyward. Instead of shooting bad guys, I spent the first half-hour just craning my character’s neck at different angles. Or consider Skyrim, a fantasy role-playing game played by tens of millions of people, all of whom were introduced to a detailed world that seemed to stretch on forever. This is exactly the sort of thing that people used to read science fiction and fantasy novels for (and the sort of thing that led Maria Bustillos to ask in a 2013 New Yorker article about “the potentialities of video games as literature”). Not surprisingly, fantasy writers like Patrick Rothfuss and Neil Gaiman are moonlighting as writers for video games.

The list goes on. Television and video games, particularly serial dramas and role-playing games, are enjoying a cultural prestige once reserved for novels. To see how radical a change in the cultural milieu this is, we need to go back to 1990, when video games were just starting out and television was still, well, really bad.

In 1990, David Foster Wallace turned a wary eye upon the relationship between the literary fiction and television of his day, in an essay called “E Unibus Pluram: Television and U.S. Fiction.” It turned out to be a manifesto for Wallace, a sketch of negative space around the enormously influential literature he would eventually write. Here is Garth Risk Hallberg in 2008, after Wallace’s suicide:

his work has mattered more to me, and for longer, than any other writer’s, and when he killed himself last week at age 46, I felt like I had lost a friend. His voice is still in my head.

That voice was just coming into being when “E Unibus Pluram” was written in 1990. Wallace’s magnum opus Infinite Jest wouldn’t be published until 1996, but it was started in 1991, and it clearly reflects the same concerns as the 1990 essay. His signature style, the voice that Hallberg heard so clearly, is synthesized linguistic anti-matter designed to explode what Wallace viewed as the cultural problems of his day. A large part of those problems concerned television — specifically, how awful and kitsch and mocking and ironical, and yet addicting, television was.

Wallace makes a specific example of the 1980s series St. Elsewhere, which was, for its time, precisely the kind of small-audience, critical-darling show that Mad Men is today. I watched some of St. Elsewhere, a series that won thirteen Emmy Awards, and it was like watching the simple cousin of one of today’s prestige dramas. Ours are slicker than St. Elsewhere, conceptually denser, and they try to make you forget they’re TV shows. But St. Elsewhere blares its clichéd nature from the pilot, filling itself with ironic in-jokes about the fact that it’s just a show. Even the ending of St. Elsewhere is a reveal that the entire series has been the elaborate fantasy of an autistic boy. The ending of Mad Men, by comparison, tries to inject itself into actual history by having us believe that Don Draper created the most famous ad of all time.

It is such differences that allow Wallace, as recently as the early 1990s, to state confidently that television is a “low art,” an opinion he says is widespread or even universal. He points to the hypocrisy of “our acquaintances who sneer at the numbing sameness of all the television they sit still for.” For Wallace, it was the fact that television was addictive low art that made him existentially concerned that Americans watched an average of six hours of it per day, and prompted him to argue that television is best understood using the language of psychological addiction. Infinite Jest instantiates this anxiety. Its driving plot element is “the Entertainment,” a video cartridge containing an experimental film so addicting that people will literally waste away watching it — and what is that if not television?

This year is the twentieth anniversary of Infinite Jest’s publication, and it, along with its spiritual predecessor Brave New World, now looks to be one of the major prescient books for the twenty-first century. Both anticipate that the greatest coming change will not be advancement in the sciences or the humanities or political ideas, but in our ability to fill our leisure time with ever more potent psychological rewards. For Huxley it took the form of drugs, but for Wallace there was a clear line between drugs and a more insidious form: the screen.

At a recent scientific conference, I got the chance to wear a virtual-reality headset for the first time. As Wallace could discern so clearly, the seductively soft hand of entertainment has our civilization by the throat, and when I put those goggles on and marveled at what I saw, I felt a tightening. The solution offered by Infinite Jest to entertainment addiction is hard work and monastic concentration on some abstract entity — what Wallace referred to as “worship.” (Worship being exactly what a book as dense as Infinite Jest requires to read; the book itself tries to be a cure for what it diagnoses.)

So what would Wallace make of the fact that television has changed? Yes, the majority is still filled with irony and acidic postmodernism and real serious nihilism-type Entertainment and laugh tracks and advertisements. But prestige dramas are a movement away from this. The Wire doesn’t have any meta-irony, doesn’t play for cheap thrills or laughs: it’s a serious meditation on how the social roles of a city are occupied, emptied, and occupied again. And even though these New Golden Age shows make up only a small portion of television, they are targeted quite successfully at a novelist’s natural audience — educated, artistically sympathetic adults — and thus are exerting a strong influence on fiction writers.

If the anxiety that drives Infinite Jest is a concern for the distracting and corrosive quality of television as a “low art,” the anxiety that drives recent novels like City on Fire or The Familiar is that television has a new displacing quality as a “high art.” The easy scoffing at TV that Wallace cites among cultural sophisticates no longer exists. And in the face of shows like Mad Men, Six Feet Under, The Sopranos, The Wire, Breaking Bad, Friday Night Lights, Game of Thrones, and True Detective, what exactly are novel writers doing that is so superior to their rivals? That’s a scary question even to consider, I think, for most novelists. As scary as television stealing audiences at the rate of six hours a day, but scary in a different way, because it presents a direct question of action: Given a promising story and cast of characters, and setting aside all practical considerations, should one write it as a TV show or a novel? Or perhaps a video game, or an interactive virtual-reality scenario? This existential question haunts a lot of contemporary fiction, which cringingly acknowledges that a reader might ask: Why is this a novel at all?

In 2012 Hallberg himself wrote a New York Times Magazine essay titled, appropriately enough, “Why Write Novels at All?” He notes a sense of techno-driven existential anxiety among modern novelists like Franzen, Wallace, Smith, and Jeffrey Eugenides. Their fear about the irrelevance of modern literature, Hallberg argues, can “be traced back to the French sociologist Pierre Bourdieu, who at the dawn of the ’80s promulgated the notion of ‘cultural capital’: the idea that aesthetic choices are an artifact of socioeconomic position.”

Bourdieu offers a seductive Marxist political answer to the question “Why do people read novels?” To put it bluntly: well-educated, upper-class people have the tastes they do — like novel-reading — to distinguish themselves from the lower classes, and thus maintain the social hierarchy. Hallberg argues that Bourdieu has had widespread influence, pointing to a cultural relativism that loudly proclaims no art better than any other. Indeed, Franzen’s division of all literary works into the categories of either “contract” or “status” novels implies he has bought Bourdieu’s hypothesis hook, line, and sinker. On this view, appeals for the unique aesthetic status of, say, the novel are little more than rationalizations for snobbery.

What the classist argument misses is a truth made familiar by Marshall McLuhan: the medium matters. Transmit information over a channel, and the properties of that channel matter. How you say something shapes what you say. Neil Postman gives a simple example of this in Amusing Ourselves to Death:

consider the primitive technology of smoke signals. While I do not know exactly what content was once carried in the smoke signals of American Indians, I can safely guess that it did not include philosophical argument. Puffs of smoke are insufficiently complex to express ideas on the nature of existence…. You cannot use smoke to do philosophy. Its form excludes its content.

Broadening this view, it’s arguable that every medium culture has invented, from the scientific paper, to the essay, to the tweet, to the television show, to the radio program, to the news article, is a mental tool. Each has a unique disposition that strongly biases the content transmitted through it, both obviously and subtly.

Sometimes, the dispositions of media overlap. For example, prose and television are both one-way: the recipient cannot respond. Or media can be analyzed by their differences. Consider the dispositions that television, and image-based media in general, have opposite to those of prose. Prose requires a certain activity on the part of the consumer: one can zone out and still watch, but not still read. In that activity prose is further disposed to engender reasoning: following a logical sequence is critical for maintaining attention during reading. This is in turn due to prose’s propositional nature: prose is always implicitly asserting certain things as true or false. Prose’s propositional nature is helped out by the presence of counterfactuals: contrary-to-fact statements like, There but for the grace of God go I, which show up all the time in normal prose. (Can you imagine trying to show directly the important things that didn’t happen on a TV show?) Counterfactuals bring us to causation, impossible to truly portray in television because mere successive appearances show only association, as Hume pointed out, whereas prose is dense with the arrows of causal attribution. Counterfactuals and causation together are aspects of the extremely modal nature of prose: its richness in sufficiency, necessity, probability, possibility, relations that can’t readily be portrayed in a cinematic shot. And what about generalization? Everything on a television screen is one particular thing, never humankind but rather individual human beings. And generalization is in turn just a special case of abstract objects: Platonic-realm denizens like integers, the perfect husband, the perfect wife, freedom, etc. Then of course there’s also analogy: metaphors, similes, and so on, because prose no matter how plain is always a stream of metaphors, a firehose spew of intellectual comparisons. Such promiscuity in analogy reflects prose’s richness in concepts: an idea, a conclusion, an aside, a digression, a point — all things that television scenes don’t have and can’t come to. And finally, prose is atemporal and aspatial: it has no fixed perspective in space or time, unlike a cinematic shot, allowing for an unrivaled range of description.

The intellect uses the tools given to it. Time spent reading has all sorts of well-documented beneficial effects, cognitive and otherwise. And this view of media as differentiated classes with a myriad of dispositions and constraints goes directly against Bourdieu’s cynical assumption that different media are interchangeable status widgets. Whatever the class-distinguishing characteristics of prose, there are supra-social reasons for its existence and use. But what of novels, specifically?

Under the reign of a pop-Bourdieuvianism which maintains that artistic tastes are social signifiers that merely reflect power structures — which are evil and should be dismantled — writers have feared being branded elitists if they promote their art. Some writers have given as their justification for continuing to churn out novels the highly safe, totally non-elitist answer originally given by David Foster Wallace: novels are a cure for loneliness.

As a rallying cry it’s been picked up by literary luminaries concerned about the fate of the novel, such as Franzen, Smith, Eugenides, James Wood, and, most recently, Garth Risk Hallberg. The slogan is undoubtedly on to something, but it also makes literature seem to be only for the bullied, the outcast, the fragile, the friendless, the geek. If readers are no longer lonely, do they no longer need novels? And then, as Wallace himself pointed out, television works as a loneliness cure too: “lonely people find in television’s 2D images relief from the pain.” Wallace managed to distinguish novels from television by declaring that the curative power of television, because of its addictiveness, rampant irony, and corrosive low-art qualities, was really snake-oil nostrum. But television has changed. Binge-watching The Wire while drinking wine with one’s significant other probably doesn’t send people into the same depressive spiral of self-loathing that Wallace experienced from binge-watching a Cheers marathon alone in a hotel room.

Hallberg’s City on Fire is an apotheosis of Wallace’s old line of thinking, even ending with the explicit authorial statement: “I see you. You are not alone.” Hallberg seems to want things both ways: fiction with the appeal of prestige TV and the curative rationale of great literature. So when does Hallberg think a novel cures loneliness? It’s when a novel

requires me to imagine a consciousness independent of my own, and equally real. So far, our new leading novelists have cleared this second hurdle only intermittently…. [W]e encounter characters too neatly or thinly drawn, too recognizably literary, to confront us with the fact that there are other people besides ourselves in the world, whole mysterious inner universes.

But New Golden Age television shows can be just as rich in characterization as any novel. How many literary characters are really that much more fully characterized and complex than Don Draper? And viewers spend almost a hundred hours with Don Draper, while readers spend at most a few dozen with Don Gately.

Philosophers Richard Rorty and Martha Nussbaum have given a defense of the novel similar to Wallace’s and Hallberg’s, advocating novel-reading as a sentimental education in empathy — a view echoed by President Obama in a recent interview with Marilynne Robinson. Yet prestige dramas too can evoke and teach empathy: The Wire with regard to lives torn by drugs and the drug wars, Mad Men to the man in the gray flannel suit, The Sopranos to the mobster, and so on. Empathy is hardly unique to novels, and plenty of great novels have been written by heartless bastards.

So novels aren’t just for entertainment, nor are they cultural widgets for class distinction, nor are they for curing loneliness, nor for portraying characters who are “not recognizably literary” in a quest to teach lessons in empathy. Which brings us back, at last, to the question: What’s so great about novels? Why are they worth spending time on even as the forest of media grows denser? The answer lies in the uncanny magic cast by novels, above and beyond their basic qualities as prose.

It is unsurprising that musings over the novel’s place in culture keep circling around solipsism. The novel is, of all the tools of the intellect, the one that brings us closest to another’s consciousness. It does this through its exploration of mind space: the realm of possible conscious experiences and their relationships to external, physical, and social contexts.

Consider, for example, the narrator as the mind within the prose of a novel. The narration of a novel isn’t just distinguished by its force and omnipresence, but also by its fundamental athleticism: more than just observing the narrator’s experiences, the reader imaginatively participates in them. In this way novels are implicit lessons in thought, learned by retracing the frozen curves of cognition that narration leaves behind. In the retracing we gain something, learning a mode of thinking often called a “style.” It’s the kind of learning that prompted Hallberg to say of Wallace, “His voice is still in my head.” A good narrator represents the kind of mind that other minds want to spend a lot of time with.

Even so, we are as likely to find a mental companion in the narrator of Montaigne’s Essais as we are in any fictional work. To truly understand the unique way that the novel as a medium exposes us to other minds requires investigating the nature of minds themselves. This is something I, as a scientist who studies consciousness, happen to do professionally.

Contemporary studies of consciousness are framed around philosopher Thomas Nagel’s classic 1974 article “What Is It Like to Be a Bat?” In it, Nagel argued that there is an irreducible first-person aspect to consciousness: its experiential quality. That is, consciousness contains a type of information, the what-it-is-like-ness of experiences, that can never be captured by external, third-person observations, such as information about brain states. Philosophers call these first-person aspects of our minds qualia. What it is like to see the color red, to kiss someone, that feeling of coolness after a pillow is flipped over — all of these are qualia. Here’s another, a particular kiss from Hallberg’s City on Fire:

She watched her hands press themselves to his face. And then she was drawing it forward. His whiskers scraped against her chin, and she could taste chewing gum and Lagavulin.

Chewing gum and scotch. Do you know the combination? How would you communicate what it is like to taste those two tastes at once to someone who had never tasted either? What data could you possibly transmit? And there’s the rub. Qualia are mysterious, accessible only to the person who experiences them. As intrinsic phenomena, they can be told but never shown. From the extrinsic perspective — science’s mode of inquiry since Galileo explicitly bracketed the observer out of the method — the world is a set of surfaces: It’s everything you can photograph or record, picture and image, measure and quantify; it is appearances and successions, composed entirely of extensions. Picture the cogs of a machine, all that Newtonian physics. Like science, television portrays only this extrinsic perspective. And the extrinsic view on the world is itself, like television, a screen, a moving image that the deep noumenal structure of reality projects its shadows on. Mere pixels. Scientifically and philosophically, we don’t know how the intrinsic and the extrinsic coexist. We don’t know how reality accomplishes such a magic trick, but we do know that the world has two sides.

Flip the extrinsic side over and you see the intrinsic world: the character and content of consciousness. Wallace, in his short story “Good Old Neon,” described the intrinsic world as “the millions and trillions of thoughts, memories, juxtapositions … that flash through your head and disappear.” He envisaged this world as composed of long descriptions written in neon, “shaped into those connected cursive letters that businesses’ signs and windows love so much.” At all times there is a vast internal stream of such neon lettering flowing by, uncaptured and unrecorded, from humans and animals alike, a deluge all being writ at once, a neon rain in reverse, the great veiled river of Earth. It is invisible and unquantifiable and will continue for as long as there is consciousness.

Fiction writers in their craft do something unique: they take the intrinsic perspective on the world. Reading fiction allows you to witness the world of consciousness that normally hides beneath the scientifically describable, physically modelable surface-world of appearances. From the intrinsic perspective of a novel, the reader can finally observe other selves as they really are, as complicated collections of both third-person and first-person facts. Doing so would normally violate all kinds of epistemological rules: from the extrinsic world you can infer but can’t directly know someone’s sensations and thoughts, not the way you directly know your own experiences.

Fiction in its design circumvents this, tunneling holes through the normally insurmountable walls partitioning up our reality. Writers get around the fact that internal states are not externally accessible by literally creating characters that exist only within their own minds. A fiction writer working on a novel is a walking Escher drawing. Authors are minds that create artificial minds within their own minds so they can directly transcribe the artificial minds for other, third-party observer minds. Which makes the novel the only medium where there is no wall between the intrinsic and the extrinsic. A novel is the construction of a possible world where thoughts and feelings are just as obvious to an observer as chairs and tables. Everything is laid out into language, represented the same way. The novel is a fantastical land where what philosophers call “the problem of other minds” does not exist. What novels do is to solve the predicament of conscious beings who are normally suspended in an extraordinary epistemological position, allowing us access to what is constantly hidden from us.

To be clear, I’m not suggesting some scale on which novels that focus more on the intrinsic world — like stream-of-consciousness novels or those devoted to minute psychological description — are somehow better. Total immersion in the intrinsic world is ultimately just as empty as total immersion in the extrinsic. The promise of a novel is that, through the universal representative powers of language and the tricks novelists employ in creating viewable minds, the whole of reality can be seen at once. No other medium gets close.

If science is the “view from nowhere,” as Thomas Nagel called it, then literature is the view from everywhere. Neuroscience and physics have failed to find a place for the phenomenon of consciousness — a failure that strongly implies that our universe is not simply atoms bouncing around like billiard balls, not just empty syntax or rule-following computation. And yet, for all its mystery, consciousness is a substance through which fiction writers swim along like old fish.

“Morning, boys. How’s the water?”

Will people still read novels on spaceships? It makes for a beautiful image: the silhouette of a human curled up with a good book, occasionally glancing out a porthole at the stars. But the perpetual state of panic that surrounds literature makes the scene more difficult to imagine than it should be. Every new communication or entertainment medium, from radio to television to video games to social media to augmented reality, has been heralded as the destruction of the novel. Yet literature remains.

The recent history of video games, the medium undergirded by the most rapidly developing technology, is instructive as to why. Back when Wallace wrote “E Unibus Pluram,” video games were comparatively basic. Thanks to Moore’s Law they have advanced in the intervening time from the pixelated simplicity of the first Final Fantasy to the photorealistic complexity of Skyrim. Yet, for those games that aim higher than mere entertainment, the struggles of the medium to incorporate the intrinsic world are apparent.

Consider what is still widely regarded as the most “high-art” role-playing game of all time: Planescape: Torment, whose 1999 release prompted the New York Times to muse that computer games might come “to have the intellectual heft and emotional impact of a good book.” But the main reasons that Planescape: Torment is high art are the in-game descriptions and dialogue, with a total estimated word count approaching one million, far longer than Infinite Jest. The reason it’s able to achieve such depth is that it is essentially a choose-your-own-adventure philosophical novel wrapped in a skin of images. Its sequel, Torment: Tides of Numenera, is scheduled for release early in 2017. Despite all the doublings in processing power in that eighteen-year interval, the new game is structured exactly as its predecessor was, except now the skin of images looks a bit nicer. No one has come up with anything better.

Certainly, computers are only going to increase in their processing power. Mind-blowing graphics are being followed by virtual reality, and maybe by the end of the century we’ll have holodecks or something, and so on. Television, or rather the twenty-first-century entertainment stream that is subsuming television, is increasing in sophistication in its effects, production, and distribution. Our ability to model, map, and represent the extrinsic world — and to create entertainment from the technologies that do so — is continually improving. But stacking more electronic switches onto a smaller chip doesn’t give you any more access to the intrinsic world, nor does making a 2D film into a 3D one. Advances in modeling the extrinsic half of the world don’t translate to advances in modeling the intrinsic half. More screens only move us closer to the ultimate screen of extrinsic reality. Syntax only gets you closer to more syntax. The reason the novel can’t be replaced by other media is that it is an intrinsic technology. It competes in a different sphere. When the astronauts on the International Space Station get books beamed up to orbit (which they do), it isn’t an anachronism: all the technologies are up to date.

Writers, then, should get over any fear they have about being artistically outdone by other media, especially by something as trapped in the screen of the extrinsic world as television. Ignored to death, as David Foster Wallace and Aldous Huxley warned? That they can be fearful of. But replacement in artistic effect? Never.

Novels will always have a place because we are creatures of both the extrinsic and the intrinsic. Due to the nature of, well, the laws of reality, due to the entire structure and organization of how universes might simply have to be, we are forced to deal with and interact entirely through the extrinsic world. We are stuck having to infer the hidden intrinsic world of other consciousnesses from an extrinsic perspective. This state leaves us open to solipsism, as Wallace suggested in saying that novels are a cure for loneliness. But the loneliness that novels cure, unlike the kind that television addresses, is not social. It is metaphysical.

At the same time, our uncomfortable position — both flesh and not — also puts us in danger, beyond just that of solipsism, of forgetting the intrinsic perspective, of ignoring that it holds an equal claim on describing the universe. In contemporary culture there has been a privileging of the extrinsic both ontologically and as explanation. We take the extrinsic perspective on psychology, sociology, biology, technology, even the humanities themselves, forgetting that this perspective gives us, at most, only ever half of the picture. There has been a squeezing out of consciousness from our explanations and considerations of the world. This extrinsic drift obscures individual consciousnesses as important entities worthy of attention.

Recently I overheard a conversation between two psychiatrists in the hallway next to my lab. One doctor was describing a patient, a young woman whose primary problem seemed to be that she was spending too much money on clothes. For the next five minutes the two debated what medications to put her on. Extrinsic drift is why people are so willing to believe that a shopping addiction should be cured by drugs, that serotonin is happiness or oxytocin is love. It’s our drift toward believing that identities are more political than personal, that people are less important than ideologies, that we are whatever we post online, that human beings are data, that attention is a commodity, that artificial intelligence is the same as human intelligence, that the felt experience of our lives is totally epiphenomenal, that the great economic wheel turns without thought, that politics goes on without people, that we are a civilization of machines. It is forgotten that an extrinsic take on human society is always a great reduction of dimensions, that so much more is going on, all under the surface.

Given its very nature, the novel cannot help but stand in cultural opposition to extrinsic drift. For the novel is the only medium in which the fundamental unit of analysis is the interiority of a human life. It opposes the unwarranted privileging of the extrinsic half of the world over the intrinsic. It is a reminder, a sign in the desert that seems to be pointing nowhere until its flickering neon lettering is read: There is something it is like to be a human being. And what it is like matters. The sign points to what cannot be seen.

How to keep this defining quality of fiction going into the future? There seem to be two paths for the novel here. One is to turn inward and concentrate solely on the mind of the narrator, a banner of the self taken up in the rise of autofiction by writers like Karl Ove Knausgaard, Sheila Heti, and Ben Lerner. Although this comes with the inherent risk of solipsism, it also allows for the deepest of mind-melds with the narrator. The second path is to expand outward and try to capture the essential property of fiction as “consciousness play.” This offers the strongest rejection of extrinsic drift by showcasing the hidden intrinsic side of the world (taking the view from everywhere) and thus examining its complexities and interactions as if there were no fundamental barrier. On both paths are works that engage consciousness with the same radical strength and freedom as a novelist, but that also eschew or deemphasize the long dramatic arcs and genre-esque literary structures that other media are growing richly marbled on.

Either way, by turning solely inward or outward to other inwards, the future of fiction may be works that, if they were adapted into a series on HBO, would lose precisely what makes them so special: novels that are untranslatable across media because they are fiction, memoir, digression, exploration of mind space, and essay put into a blender. Not that such untranslatable novels are new. All avant-garde work is really return; the introduction to my edition of Moby-Dick describes it as:

simply too large a book to be contained within one consistent consciousness subject to the laws of identity and physical plausibility. The narrating mind (called Ishmael at first) hurtles outward, gorging itself with whale lore and with the private memories of men who barely speak.

Of course, no matter what writers do, extrinsic drift might make the novel seem culturally obsolete, even when it’s not. Fiction might still perish as a medium, even if it doesn’t deserve to, brought down by any number of causes. It could be made unappealing from without by minds warped by the supernormal stimuli of television, video games, virtual reality, and the constant stream of entertainment from the Internet. Or it could be finished off by an attack from within, by those who want literature dismantled for the greater good so that it can be rebuilt as a small subsidiary arm of the political left, preaching to the choir. Or the novel may die the boring death of being swallowed up by the metastasizing bureaucracies of creative writing programs, joining contemporary poetry in a twilight that lingers only because professors of writing teach students to become professors of writing. And so it goes.

But at least, if the novel falls, it won’t be because of its artistic essence. It won’t be replaced in its effects by equivalent television or video games or any other extrinsic medium. If the novel goes, it will be because we as a culture drifted away from the intrinsic world. Left without the novel, our universe will be partitioned up, leaving us stranded within the unbreachable walls of our skulls. And inside, projected on the bone, the flicker of a screen.