The Neuroscience of Despair

The trouble with seeing depression solely as a brain malfunction

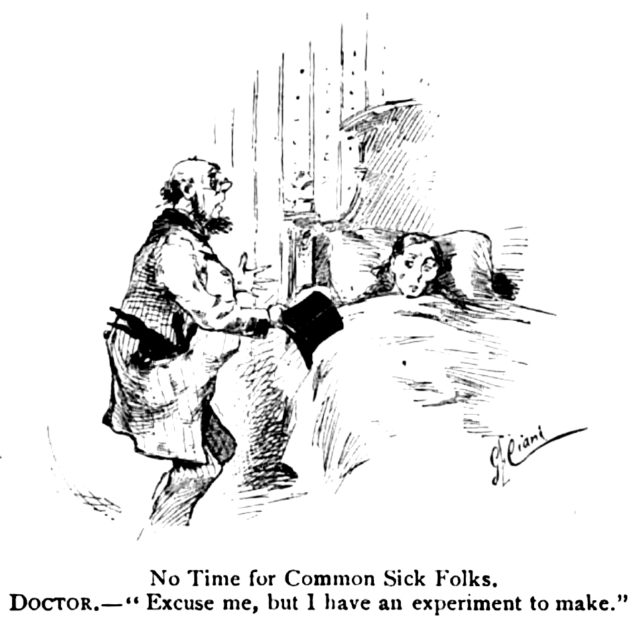

Criticism of medicine as centered in molecular biology and technology, and prone to neglect the personal and social dimensions of health and illness, has a long history. Already in the 1880s, at the very moment in which medicine was being reconstituted by discoveries from laboratory science, there was pushback. Puck, the famous American magazine of humor and political satire, ran a panel of cartoons about medicine in 1886, one with the caption “No Time for Common Sick Folks.” The drawing shows a doctor in a lab coat leaving the bedside of a patient, hat in hand and rabbit in pocket, with the apology, “Excuse me, but I have an experiment to make.” A few years later, neurologist James J. Putnam, in an address to the Massachusetts Medical Society, observed that a concern to treat “not the disease only, but also the man” was a “familiar sentiment that often falls so solemnly from the lips of older members of the profession.” Ever since, medicine has been hailed for its extraordinary explanatory and technical successes while at the same time generating considerable discontent. Against a narrow biologism and procedure-orientation, critics have argued for more socially oriented and humanistic approaches.

Why have these alternatives not gained more traction? Why have socially oriented and integrative approaches, despite their long appeal, remained marginal? Why, to turn the question around, does medicine continue on a course characterized by reductionism, mechanism-based explanations for clinical syndromes, and heavy reliance on technological solutions, despite arguments for change? No answer to these complicated questions can hope to be remotely complete, but I want to frame a general explanation by considering the powerful appeal of two enduring legacies, one from the seventeenth century and one from the nineteenth. Each is familiar enough. Philosophers and theologians have often reflected on the implications of seventeenth-century natural philosophy, particularly the works of Francis Bacon and René Descartes, to understand the commitments of modern science and medicine. Historians more commonly concentrate on the nineteenth-century changes that joined medicine with the physical and life sciences and gave birth to what we now call the “biomedical model” — a set of ideas that have structured thinking about disease and treatment ever since, often reducing medicine to a technical, scientific discipline. These two legacies together have given reductionist medicine a distinct cultural authority.

But this authority is also rooted in certain modern preoccupations: the valuation of health, which has increasingly become an end in itself; the “war against all suffering,” to use Ivan Illich’s phrase; and the project of self-determination. These preoccupations lay down powerful moral imperatives, which help to account for both the continued assertion of the biomedical model and for its extension over more and more areas of our lives, an extension held back only by the limits of our current technological powers.

The story of biomedicine begins with the birth of modern science in the seventeenth century. The philosophical part of the story is particularly relevant here. Seventeenth-century natural philosophy (the precursor of natural science) articulated a revolutionary new stance toward the world, elaborated with particular clarity and influence by Francis Bacon, René Descartes, and Isaac Newton. The new stance involves a rejection of traditional understandings of final causes and of the universe as a hierarchy of meaningful order, while affirming an objectified conception of nature — a neutral domain capable of mechanistic explanation and, most important, prediction and instrumental manipulation. For Bacon, as for Descartes, the new natural philosophy begins with skepticism — setting aside preconceived notions — and brings knowledge of and power over nature. And for both it originates in a deep moral imperative to serve human well-being and better the human condition.

Philosophers have interpreted this moral imperative in different ways, but it includes at least two principal directives. The first is an injunction to relieve suffering and conserve health, and the second is an injunction to extend emancipation — from fate, from social constraint, from the authority of tradition — and self-determination. These goals are particularly clear in the writings of Bacon and Descartes, who formulate their projects primarily from a theological conviction that an instrumental approach to nature is required for the sake of God’s glory and human benefit. But even as these theological beliefs are slowly stripped away in the succeeding years, the humanitarian imperative remains, and in a sense expands, as the relief of suffering and freedom from necessity become ends in themselves.

The cure of disease is integral to this enterprise from the beginning. The remediation of illness, the conservation of health, and the prolongation of life are central concerns of Bacon, especially in his later works, including “New Atlantis” (1627). So too for Descartes, who in the Discourse on Method (1637) observes that “everything known in medicine is practically nothing in comparison with what remains to be known.” He is confident that “one could rid oneself of an infinity of maladies, as much of the body as of the mind, and even perhaps also the frailty of old age, if one had a sufficient knowledge of their causes and of all the remedies that nature has provided us.” These heady ambitions decisively shaped the emerging scientific revolution. They are, in an important sense, what made the whole effort worthwhile.

The drivers of the revolution were the societies dedicated to scientific research that began to appear in the mid-seventeenth century. The one that emerged in England in 1662, the Royal Society, was directly animated by Bacon’s thinking that the new science would be a public and collective endeavor. Its motto was “Nullius in verba,” roughly “take nobody’s word for it,” a clear indicator of the break with traditional authority.

Over time, the Royal Society helped to institutionalize the scientific enterprise, and many notable British scientists (and some early American ones, like Benjamin Franklin) were Fellows of the Royal Society, including Isaac Newton, who was its president for more than twenty years. Similar organizations sprang up subsequently in France, Germany, and elsewhere. Collectively, they had a hand in most of the inventions that produced the Industrial Revolution. Later, Fellows of the Royal Society would be instrumental in two of the early great breakthroughs in modern scientific medicine: Edward Jenner with the smallpox vaccine (1790s) and Joseph Lister with antiseptic surgery (1860s).

Shortly after the founding of the Society, one of its members, Thomas Sprat, wrote The History of the Royal Society. Heavily influenced by Bacon, whose image appears in the frontispiece, the book provides a good window on Baconian assumptions at work with respect to medicine. Sprat had to put his writing on hold when the Great Plague broke out in 1665, which was followed and partly stopped by the Great Fire of 1666 (the blaze consumed many infected rats). This outbreak of bubonic plague killed some 20 percent of the population of London, while the Fire destroyed as much as 80 percent of the city proper. When Sprat resumes his writing, he comments on how the tragedies have spurred him to complete his book: “it seems to me that from the sad effects of these disasters, there may a new, and a powerful Argument be rais’d, to move us to double our labours, about the Secrets of Nature.” He notes that the Royal Society had already been working on the improving of building materials and that the disasters should move us “to use more diligence about preventing them for the future.” Although medicine had no remedies for the plague, he is confident a cure may yet be found. He then offers a moral point that is central to the Baconian outlook:

If in such cases we only accuse the Anger of Providence, or the Cruelty of Nature: we lay the blame, where it is not justly to be laid. It ought rather to be attributed to the negligence of men themselves, that such difficult Cures are without [i.e., outside] the bounds of their reason’s power.

Sprat was a clergyman, and his comment about Providence reflects a belief in our God-given power to free ourselves from subjection to fate or necessity by gaining mastery over nature: No disease is necessarily incurable; no suffering necessarily unpreventable. At the same time, he asserts our complete and urgent responsibility to develop and use that power for human good. Passively accepting our limitations and not exercising our power to overcome them is a form of negligence. It is our positive moral obligation to engage in “much Inquiry” — the rigorous application of scientific methods — and so to unlock nature’s secrets and discover the means to relieve suffering.

In delineating which matters the Society saw as its proper province, Sprat anticipates that the new science can and will extend beyond the body to the soul. While the study of the human body comes within the Society’s purview, it does not discuss religion, politics, or the “Actions of the Soul.” It omits these aspects in part because it does not wish to encroach on other fields of study — “Politicks, Morality, and Oratory” — which are concerned with these domains, but even more so because human “Reason, the Understanding, the Tempers, the Will, the Passions of Men, are so hard to be reduc’d to any certain observation of the senses; and afford so much room to the observers to falsifie or counterfeit” that the Society would be “in danger of falling into talking, insteed of working.” Nevertheless, “when they shall have made more progress, in material things, they will be in a condition, of pronouncing more boldly on them [questions of soul and society] too.”

Sprat distances himself from the fully reductive, mechanistic account of human nature that others of his time were already beginning to propose. He believes the human person is a “Spiritual and Immortal Being.” Still, his empiricism resists limits, and though human reason and will and emotion are hard to reduce to sense observation, he seems to think it is possible, and that as experimental and other scientific work progresses it will get easier to study the soul empirically. Two incipient ideas are at work here that less religious minds would subsequently embrace with fewer reservations. First, the objectification and mechanization of nature includes human nature. Questions of meaning will (in time) yield to questions of how things work. And, second, what is real is what can be apprehended by the senses. That which is real has a position in physical space and thus, in principle, can be measured.

Sprat’s exposition, then, outlines some key features of Bacon’s project and their implications for medicine. Many of the ideas, of course, have widely influenced modern science and so also medicine to the degree that it has drawn on the techniques and orientation of science. The foremost idea is the disenchantment of nature and the new criteria for conducting and appraising inquiry: a neutral world of facts, ordered hierarchically and reducible to objective, physical processes obeying mechanistic and predictable laws. Human values or goods, by contrast, are treated as inherently subjective, mere projections onto this world that can (and must) be banned as much as possible from the reasoning process by methodical disengagement. At the same time, however, the very adoption of this naturalistic outlook is infused with humanitarian goals and moral valuations. The whole point is to foster human emancipation and relieve suffering, bringing technological control over all of nature, and making it, in Sprat’s quaint phrase, “serviceable to the quiet, and peace, and plenty of Man’s life.”

So in an important sense the Baconian outlook, despite its apparently instrumental and value-neutral approach, itself becomes a basis for social and moral judgments. Its open-ended commitment to relieve suffering trumps other ways of thinking about the limits of medicine or bodily intervention, for instance convictions about the body as ordered by certain moral ends.

Further, by mechanizing human nature and materializing reality, the new science takes upon itself the power to recode human experience. Nature for Bacon and his followers is indifferent to human purposes, a world of objects that is contrasted with subjects and subjective meaning. Dealing with the physical, the concrete, the real avoids the “danger of falling into talking,” as Sprat puts it, and guarantees that one is outside the subjective domains of morality, religion, and politics. The corollary is that when those domains, having to do with the “Actions of the Soul,” are reduced to their physical mechanisms, they are removed from the subjective to the objective and neutral realm of nature. And if the real has a location in physical space, then whatever cannot be shown to occupy such a space is less real if not unreal. The materialist reduction, then, is imbued with the power to establish a kind of neutrality with respect to questions of the good — what is not religious or moral or cultural or political — and objective reality.

To this general outlook that medicine inherits from the scientific revolution we must add another more specific legacy, one that emerged in the historical moment when that revolution finally reached medicine.

Despite the great hope for medical progress at the very heart of the scientific revolution, the fruits of the revolution came late to medicine. This is surprising and testifies against the popular notion that the triumphs of science and the growth of its authority have been on a more or less linear trajectory since the seventeenth century. They have not. What we regard as modern medicine begins in many respects to come into its own only in the late nineteenth century. Its development is in part a story of breaking free from the tradition of medicine going back to the second-century physician Galen, which offered a comprehensive and holistic account of health and disease. While in physics and astronomy Renaissance-era discoveries led to the replacement of theories tracing back to the Greeks, in medicine such seventeenth-century discoveries as William Harvey’s work on the circulation of blood and Thomas Willis’s on the autonomic nervous system were simply incorporated into revised versions of Galen’s model. Even after Edward Jenner’s vaccination for smallpox had become widely accepted, it was still situated within a therapeutic regime that related it back to the economy of the whole body.

The genius of the Galenist system resided in its holism, according to which illness is idiosyncratic and unique to each individual; it is a disharmony, imbalance, or abnormal mixture of the four humors that each individual has in some natural and harmonious personal ratio. The humoral balance can be disturbed by any number of factors, including exposure to “noxious air” (or “miasma”; this theory arose to explain the etiology of contagious diseases), doing the wrong thing, experiencing strong emotions, inappropriate bodily discharges (for example those resulting from masturbation), and so on. In this system, there is no practical distinction between morality and mechanism, as personal habits are important to vulnerability; and there is no sharp distinction between mind and body as they interact. General prevention emphasized the value of prudently managing what Galen called the “nonnaturals,” including environmental factors of air, food, and drink, and bodily functions such as exercise, rest, evacuation, and emotion. Medical treatment was geared to a readjustment of the balance, with bloodletting, enemas, and emetics among the physician’s common tools. Ordinary people, who turned to the doctor as a last resort, took a Galenist approach to self-treatment. From the seventeenth century on, a growing number of what would later be called “patent” medicines that had tonic, purgative, stimulant, or sedative properties were widely sold.

Broadly speaking, two developments brought the 1,500-year-old Galenist tradition to an end in mainstream medicine. The first was the idea of disease specificity. Rather than disruptions of the whole body, diseases came to be understood as specific entities with separate and universally identifiable causes and characteristic physiological effects. This notion of disease, which was not entirely new, was medical convention by the end of the nineteenth century. Various discoveries — for example, the postmortem studies of Giovanni Battista Morgagni (1761) and Xavier Bichat (1800) on pathogens attacking particular organs — had been slowly fixing the notion of specific disease, but the decisive change came in the 1880s with the establishment of bacteriology.

The notion that communicable diseases are caused by living organisms (the germ theory) had been in circulation for centuries, but resistance to it finally yielded with a string of breakthroughs from 1860 onward. Louis Pasteur, for instance, showed how sterilization can kill microbes, and Joseph Lister, mentioned above, demonstrated that antiseptic procedures reduce the risk of infections from surgery. In rapid order, Robert Koch isolated the anthrax bacillus, the tuberculosis bacillus, and the cholera bacillus and demonstrated that they are contagious, while Edwin Klebs isolated the bacterium responsible for diphtheria.

Germ theory revolutionized medicine and the very conception of disease. The essential idiosyncrasy of disease is gone; instead, the afflicted individual came to be seen as the “host” for impersonal physiological processes. The historian Charles E. Rosenberg observes in Our Present Complaint (2007), a book about American medicine, that “germ theories constituted a powerful argument for a reductionist, mechanism-oriented way of thinking about the body and its felt malfunctions,” so that the disease, not the patient, now tells the story. Additionally, the development of a whole range of new tools, from the thermometer to X-rays, made it increasingly possible to describe diseases with new and standardized precision. The patient’s own report of signs and symptoms counted for less and less, as did social and environmental factors.

But another idea, more implicit and still little realized at the end of the nineteenth century, was also critical to the overthrow of humoral theory and to the establishment of scientific medicine. This is the idea of treatment specificity. The newly identified bacteria suggested not only that each was associated with its own disease but that each might require specific management, for instance in the form of immunizations. The chemist and founder of chemotherapy, Paul Ehrlich, famously labeled the ideal treatment a “magic bullet” — a medicine that effectively attacks the pathogens in the targeted cell structure while remaining harmless in healthy tissues.

Successful specific treatments captured the public imagination and drastically changed people’s expectations of medicine and doctors. The historian Bert Hansen identifies Pasteur’s 1885 discovery of a vaccine for rabies as a seminal moment in which a medical breakthrough really engaged the public imagination. An incident that year, involving several young boys bitten by a rabid dog in Newark, New Jersey, provided the galvanizing event. A doctor, who knew of Pasteur’s vaccine, wrote to the newspaper that reported the incident, urging that the children be sent to Paris to be treated and that donations be solicited if necessary. The newspaper cabled Pasteur, who agreed, donations flowed in, the four boys were vaccinated in Paris, and none contracted rabies. The story became widely known all over the United States. Subsequent cases of rabies bites met similar demands to go to France, and within a year, domestic clinics sprang up to administer the shots. A threshold was crossed. A few years later when Robert Koch announced a cure for tuberculosis, the discovery was breathlessly reported for months. Though Koch was later proved mistaken, what is striking, as Hansen observes, is the speed and enthusiasm with which everyone — professionals and public alike — embraced the possibility of cures for disease. In 1894, the diphtheria antitoxin was greeted with immediate and widespread publicity.

Advancements in curative medicine continued, especially after 1920. By World War II, a tuberculosis vaccine, insulin, and the first widely used antibiotics (penicillin and the sulfonamides) were available, among other new drugs, and were having a powerful effect on public consciousness. A 1942 article in Popular Science, reflecting on the sulfa drugs, expresses some of the rising expectations:

The nature of these drugs, and the manner of their application, suggests that the biological revolution is beginning — only beginning — to catch up with the industrial revolution. Heretofore most of the human effort that has been invested in the development of the exact sciences has been devoted to improvements in machinery. Progress in the biological arts and sciences, although extensive, has nevertheless tended to lag behind mechanical progress in precision and certainty.

Growing sophistication in medicine in the following years would bring even higher expectations for its success.

Beginning in the 1940s, researchers became increasingly interested in “multiple cause” approaches to noncommunicable diseases and, in the early 1960s, in “risk factors”: specific exposures that increase the probability of disease but are not in themselves necessary or sufficient to cause it. The subsequent regime of disease prevention concentrated almost exclusively on individual-level “lifestyle” behaviors and consumption patterns, such as smoking, diet, and exercise. This approach did not challenge the existing biomedical model of physiological disease mechanisms but enlarged it. The introduction in 1958 of a drug for hypertension, which was increasingly discussed as a disease in its own right, and later of drugs for other risk factors, helped solidify risk-factor and lifestyle approaches and fuel new medical hope for the prevention and successful management of, if not cure for, chronic conditions.

More recently, gene therapy and stem cell research have become leading repositories of popular and professional enthusiasm for breakthrough cures. In his 2010 book The Language of Life, Francis Collins, director of the U.S. National Institutes of Health and former head of the publicly funded effort to map the human genome, sees a “growing ocean of potential new treatments for diseases that are flowing in from the world’s laboratories, thanks to our new ability to read the secrets of the language of life.” If initial expectations for applications of the new genomic knowledge were naïve, Collins avers, those expectations were not altogether misplaced. With a sustained commitment to vast outlays for research, cures will inevitably follow. The mechanisms can and will be found.

Popular optimism and expectations remain high, if less nourished by any precise sense of historical development. Without too much exaggeration, one might say that medical optimism has been industrialized in recent decades, ceaselessly produced by health groups and media. Private medical foundations, patient-advocacy and medical-identity groups, pharmaceutical and medical-device companies, research universities, television news segments, newspaper health sections, health magazines, Internet health sites, and more all generally speak a buoyant message of unceasing “life-changing advances,” progress toward prevention and cures, and routine “miracles.” Even the producers of scientific research have media relations departments that help popularize the latest studies. Medical hope has become a big business.

In part because of past successes, the biomedical model — with the mechanism-oriented reductionism of disease specificity and treatment specificity — has remained dominant. Here, for example, is how two researchers, writing in Nature Reviews Cancer in 2008, describe the direction for the treatment of cancer, now viewed as a multifactorial disease: “We foresee the design of magic bullets developing into a logical science, where the experimental and clinical complexity of cancer can be reduced to a limited number of underlying principles and crucial targets.” The new paradigm “is the development of ‘personalized and tailored drugs’ that precisely target the specific molecular defects of a cancer patient.”

The debate over the fifth revision of psychiatry’s Diagnostic and Statistical Manual of Mental Disorders is another example. Many in the field argued for a move away from heuristic categories focused on symptoms of psychiatric illness, preferring instead a medical model focused on mechanisms that can be identified through neuroscience and molecular genetics, have laboratory or imaging biomarkers, and be subject to manipulation by specific treatment interventions. According to the first chapter of a leading psychiatry textbook, the goal is a “brain-based diagnostic system.”

The promise of specific treatment is a crucial element of the enduring appeal of disease specificity and reductionism. But it is not the only one. Precise and specific disease categories are now woven into every feature of medicine, from structuring professional specialization, to doctor-patient interaction, to research and clinical trials, to all aspects of the centralized and bureaucratic delivery and regulation of health care. Even the day-to-day management of hospitals is organized around specific diagnostic categories, and all the readings and statistics and codes and protocols and lab tests and thresholds and charts take their meaning from them and reaffirm their central place in how we think about medicine.

Therapeutic promise and bureaucratic need, while important, are not the whole explanation for why mechanism-based medicine has achieved its high status in contemporary society. More is at work. This type of medicine also bears a distinct cultural authority, which, following the sociologist Paul Starr, involves conceptions of reality and judgments of meaning and value that are taken to be valid and true. At stake is the power to pronounce and enforce agreement on definitions of the nature of the world, and the status of particular facts and values. No single feature can account for this power, but one critical element lies in the nexus between medicine and liberal society.

Modern Western societies, and perhaps most strongly the United States, recognize two unequivocal goods: personal freedom and health. Both have become ends in themselves, rather than conditions or components of a well-lived life. Neither has much actual content; both are effectively defined in terms of what they are not and what they move away from, namely constraint and suffering. As such, both are open-ended, their concrete forms depending on particular and mutable circumstances that can only partially be specified in advance (against certain conditions of exploitation, for example, or disease).

Personal freedom is the hallmark of liberalism — the “unencumbered self,” in Michael Sandel’s felicitous phrase, acting on its own, inhibited by only the barest necessity of social interference or external authority, and bearing rights to equal treatment and opportunities for social participation and personal expression. This free self still assumes some reciprocal responsibilities, including respecting the rights and dignity of others and working out one’s self-definition and lifestyle in a personally fulfilling and generative fashion.

Since at least the nineteenth century, health has contended with freedom for pride of place as the preeminent value in Western societies. The preoccupation with health has only intensified in recent decades, with some even speaking of the emergence of the “health society.” The active citizens of the health society are informed and positive, exercise independent judgment and will, and engage experts as partners in a kind of alliance relationship. The ideal is to live a “healthy lifestyle,” which prioritizes the avoidance of behaviors correlated with increased risk of disease, such as smoking, and the cultivation of a wide range of “wellness activities,” including a carefully crafted diet and a vigorous exercise regimen. The concern with health is more than a matter of avoiding illness, though it certainly includes that. It is also a means of moral action, a way to take responsibility for oneself and one’s future and confirm one’s solidarity with the values of a good society. The mass production of medical optimism, as well as the dissemination of research findings and how-to advice, serves as an important and necessary backdrop, urging individuals to place their hope in professional expertise and self-consciously reorder their daily lives in light of the latest information and findings.

Medicine is deeply implicated in the cultural priorities of autonomous selfhood and optimized health. Both priorities have a central concern with the body. Of course, the link between medicine and the body is obvious in the case of illness. But that is only the beginning. The body has also become a site for projects of emancipation and the construction of selfhood and lifestyle. “Biology is not destiny” was a rallying cry for the unmaking of traditional gender roles a generation ago, part of a broader challenge to bodily boundaries and limitations once regarded as simply given or natural but increasingly seen as oppressive and inconsistent with free self-determination. Destiny has been overcome by technological interventions that severed “fateful” connections (such as between sexuality and reproduction) and that opened up an increasing range of bodily matters to choices and options — the shape of one’s nose, a tendency to blush, baldness, wrinkles, infertility.

These enhancement uses of medical technology are only one way in which medicine is now interwoven with cultural ideals of self and health. The Baconian legacy established the background assumptions that situate medicine in service to self-determination, emancipation from fate, and relief of suffering; and reductionist, mechanism-oriented medicine offers powerful means to achieve these goals. These humanitarian commitments are open-ended. While it is tempting to think that the boundaries of disease itself (or anatomical or molecular abnormalities) would constitute a limit, they do not. Many routine medical interventions are performed merely to ease the discomforts of everyday life, life processes, and aging and have little to do with disease.

If not disease, then surely bodily interventions constitute the limits of medicine’s commitments? Already in the seventeenth century, as we have seen, biological reductionism was gaining a hearing, and it became increasingly solidified in the nineteenth and twentieth centuries. Psychiatry would seem to offer the clearest exception. Virtually none of its hundreds of specific disease categories has any known etiology or pathophysiology. But as Thomas Sprat predicted, the soul has entered the scientific agenda. Nineteenth-century neurologists, like George Miller Beard with his diagnosis of neurasthenia (“tired nerves”) or the young Sigmund Freud, saw themselves as dealing with biological phenomena. Contemporary neuroscience resolves the mind-body problem by treating mind as an emergent property of the hierarchical organization of the nervous system. So, as noted earlier, the lack of known mechanisms, the de facto deviation from the biomedical model, is viewed by many in psychiatry as a temporary situation, a sign that the science is still in its early stages and that with the advance of science the neurological and genetic mechanisms that underlie psychiatric disorders will be discovered. It’s only a matter of time.

The body, then, does represent something of a conceptual limit, but as the example of psychiatry suggests, it is not a practical limit. Intervention need not wait for biology. A great many drug discoveries have been serendipitous; that they work for some desired purpose is far more important than why they work, and their use in medicine has proceeded despite the failure to understand them. Further, by the specific treatment rationale — wherein predictable treatment response implies an underlying mechanism — the clinical effects of drugs are often taken as evidence that something is awry in the body. This, for example, is what the psychiatrist Peter D. Kramer meant by the title of his bestselling book Listening to Prozac (1993). The intervention may supply the evidence for the biology.

In the absence of actual limits, medicine’s open-ended commitment to foster self-determination and relieve suffering, as woven together with cultural priorities of self and health, draws it into treating an ever-wider range of concerns and complaints. As autonomy has become more of a cultural ideal, so limitations on autonomy are felt to be a burden, and medicine is called upon to relieve this burden. This goes for virtually any attribute that an individual might regard as inhibiting: short stature, anxiousness, shyness, perfectionism, low task-specific energy or concentration, insufficient libido, and much more. So too with troublesome emotions and with various role conflicts or inadequacies, such as in parent, spouse, student, or employee roles. Intervention in these matters is considered legitimate medicine because it reduces the patient’s burdens.

Medicine is also increasingly called upon to resolve many issues of difference and deviance from social norms. While it may be that some of this type of medicalization is the result of an aggressive expansionism on the part of medicine or the “medical-industrial complex,” there is no question that medicine has been and continues to be drawn in where other cultural institutions have already largely disappeared. Case in point: the elimination in the fifth edition of the Diagnostic and Statistical Manual of Mental Disorders of the “bereavement exclusion” from the diagnostic criteria for depression. Previously, depression was not to be diagnosed if an individual had lost a loved one within two months, because grief and depression have similar symptoms and grief is not a mental disorder. The Mood Disorder Work Group for the DSM-5, which originally proposed the change, argued that some of what looks like normal bereavement is actually depression, and a failure to diagnose would be to deny needed treatment. Critics, on the other hand, contended that removing the exception would be pathologizing normal behavior. My point is just that it is very unlikely that this discussion would even be taking place if the larger communal system of customs and rituals that once defined and guided mourning had not already collapsed. Experiencing grief as a primarily private burden, individuals seek out antidepressants; psychiatrists, being “clinically proactive,” want to make the diagnosis and provide the treatment. The issue here is not so much that psychiatry seeks this role as it is that when people seek clinical help, it is exceedingly difficult to refuse. Doing so can seem insensitive, even cruel, a failure to discharge medicine’s mandate.

Besides relieving suffering and enhancing self-determination, there is another reason why medicine has come increasingly to manage issues of difference and deviance. Medicine, it hardly needs saying, has no answers to existential questions or social problems. What it offers is something different; indeed, something better, from the point of view of a liberal order in which the dominant idea is that each person’s good is a question of his or her own convictions or preferences. Medicine offers (seemingly) objective, value-free modes of discourse that can bypass conflicting conceptions of the good, offer plausible “accounts” for behavior and emotion that place persons within a positive narrative trajectory, justify intervention and certain exemptions (for example, relief from social responsibilities), and decrease stigma by qualifying, though not eliminating, thorny questions of responsibility for oneself.

These are powerful modes of discourse, with profound implications for how we understand ourselves and our world. On the one hand, a reduction to biological malfunction recodes bodily states, behaviors, or emotions as morally neutral objects, distinct from the patient’s self and causal agency. The language of the body is not a moral language, and the language of health needs no justification; it is by definition in the patient’s own and best interest. Qualitative, evaluative distinctions — about what deviance is considered an illness, about what constitutes appropriate intervention, about what features of individual lifestyles will constitute risks, and so on — are in fact made, but they take place in bureaucratic and professional contexts far upstream of the clinic. In the actual clinical interactions, the language is not of judging but of diagnosing, not of moral failures but of disorder mechanisms, not of social problems or exploitative structures but of individual illnesses. All moral, social, or other normative evaluations, all affronts to the patient’s autonomy and self-image, appear to have been excluded, all questioning of legitimacy put off the table.

On the other hand, the key to this clinical truth and cultural authority is the reduction to the body. So the biomedical model speaks only that truth about illness, behavior, and emotion that can be linked to the body, and the tighter the linkage (physical measurements are the gold standard), the more legitimate the illness. Without a clear biology, questions of culpability and malingering and social influences may be and often are reasserted. This is another reason why psychiatry so doggedly pursues neurology and genetics to the exclusion of other explanatory approaches. It is why medical interventions, when they enforce norms on deviant behavior, are often controversial. A prominent example is the argument over Attention Deficit Hyperactivity Disorder (ADHD) and psychostimulant treatment for dealing with the dual imperatives of education — docility and performance. The debate over classifying obesity as a disease is another. The reduction to the body is why patient advocacy, medical specialty, and social movement groups lobby for certain categories as legitimately physical, like post-traumatic stress disorder or chronic fatigue syndrome, and why they can lobby against others as illegitimately influenced by social norms, such as homosexuality or masochistic personality disorder. It is why psychosomatic disorders have a low status and why there seems to be a constant need to remind people that depression or social phobia or most any psychiatric disorder is a “real disease.” The biomedical model leaves some things out, thereby consigning them to the imaginary, the ambiguous, the subjective, the culpable, and the not quite legitimate. The power to dispose is also part of its cultural authority.

The authority of the reductionist biomedical model helps to explain why its critics are attracted to more holistic approaches — but also why they fail to take hold. Charles Rosenberg, considering the question of the failure of integrative approaches in both the recent and more distant past, argues: “The laboratory’s cumulative triumphs have made this holistic point of view seem not so much wrong as marginal, elusive, and difficult to study in a systematic way.” But that is only part of the story. A comprehensive theoretical model that could integrate biological and psychological, as well as social and environmental, underpinnings of disease would still run up against serious problems. The cultural priorities of autonomous selfhood and optimized health are deeply individualistic, and the reductionist biomedical model and lifestyle approach offer an important moral and philosophical grounding for individualism that a holistic model would be far less likely to produce.

For much the same reasons, we can see why we invite medicalization. On the individual level, a specific diagnosis provides a predetermined narrative that can decrease the burden of responsibility, account for problematical experience, justify exemptions from social expectations, and offer a positive prognosis and access to treatment, all within a seemingly value-neutral framework. Access to medical technologies for emancipatory and lifestyle issues is another incentive, one that in the liberal health society can become an obligation. The relentless yearning for control, coupled to the optimism industry, makes even the contemplation of therapeutic limits difficult to accept.

We can also see why, with respect to socially problematic behaviors or emotions, postulating a specific disease and biological mechanism and calling for medical remedies have a powerful appeal and might even be regarded as a matter of justice. Granted, medical experts will often be hesitant to accept a proposed specific disease as legitimate when its biological course is not clearly known. But biological explanation for the disease can be finessed, and the cultural authority at work here is such that once a useful disease category is created, it takes on a life of its own. Skepticism may not be entirely silenced, but effective resistance is far less likely. Again, ADHD — with no agreed-upon etiology or pathophysiology and a long history of public controversy and skepticism, yet skyrocketing rates of diagnosis and treatment — is a case in point.

Of course, in tracing this history of the biomedical model, I have said relatively little about the role of commercial forces and political institutions in promoting a reductionist perspective of health and illness. My point was not to deny their importance. But I have stressed the moral and cultural power of reductionist medicine to bring into relief a critical feature that is typically relegated to an afterthought. The appeal of reductionism has deeper roots than any specific government policy or commercial strategy. And it remains strong despite widespread skepticism about its technological and diagnostic overreach, sharp critiques of the “medical-industrial complex,” persuasive demonstrations of the social determinants of health and illness, and a decline in the public trust in medical experts. If we are to make progress in a more holistic direction, the question of cultural authority — ultimately an ethical question — will have to be addressed.
